LLMChatElement

The LLMChatElement is a core component for integrating Large Language Models (LLMs) into chat applications. It handles communication with LLM providers and generates responses from input messages. Its interface receives a list[MessagePayload] and responds with a single MessagePayload.

Instantiation

LiteLLM

Under the hood, this element uses LiteLLM and the standardized model names used by the APIs of various providers. When the element is instantiated, it looks for a .env file in the working directory unless an env_path is provided.
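The lookup rule can be sketched with the standard library alone. This is a minimal illustration, not the element's actual loader: the function names `resolve_env_path` and `load_env_file` are hypothetical, and the choice to leave already-set environment variables untouched is an assumption.

```python
import os
from pathlib import Path
from typing import Dict, Optional


def resolve_env_path(env_path: Optional[str] = None) -> Path:
    """Return the explicit path if given, else .env in the working directory."""
    return Path(env_path) if env_path else Path.cwd() / ".env"


def load_env_file(env_path: Optional[str] = None) -> Dict[str, str]:
    """Parse simple KEY=VALUE lines and export them to os.environ.

    Assumption: variables already present in the environment are not overridden.
    """
    path = resolve_env_path(env_path)
    loaded: Dict[str, str] = {}
    if not path.is_file():
        return loaded
    for raw in path.read_text(encoding="utf-8").splitlines():
        line = raw.strip()
        # Skip blank lines, comments, and anything that is not KEY=VALUE.
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        key, value = key.strip(), value.strip().strip("'\"")
        loaded[key] = value
        os.environ.setdefault(key, value)
    return loaded
```

Calling `load_env_file()` with no argument reads `.env` from the current working directory; passing a path mirrors the env_path argument.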

APIs

We rely on the standard API key names, such as OPENAI_API_KEY, ANTHROPIC_API_KEY, and XAI_API_KEY, being present in the .env file, depending on the model you wish to use. For more on authentication, see the docs here.
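As a rough sketch of how a model name relates to the key it needs, the helper below maps LiteLLM-style `provider/model` prefixes to environment variable names. The helper names `required_key_for` and `missing_key` are hypothetical, and treating unprefixed names as OpenAI models is a simplifying assumption rather than LiteLLM's full resolution logic.

```python
import os
from typing import Mapping, Optional

# Provider prefix -> environment variable. The first three names come from the
# text above; OPENROUTER_API_KEY is the name LiteLLM documents for OpenRouter.
PROVIDER_KEYS = {
    "openai": "OPENAI_API_KEY",
    "anthropic": "ANTHROPIC_API_KEY",
    "xai": "XAI_API_KEY",
    "openrouter": "OPENROUTER_API_KEY",
}


def required_key_for(model_name: str) -> Optional[str]:
    """Guess which API key a model name needs (illustrative heuristic only)."""
    # Assumption: names without a provider prefix are treated as OpenAI models.
    provider = model_name.split("/", 1)[0] if "/" in model_name else "openai"
    return PROVIDER_KEYS.get(provider)


def missing_key(model_name: str, env: Mapping[str, str] = os.environ) -> Optional[str]:
    """Return the name of the required key if it is absent or empty, else None."""
    key = required_key_for(model_name)
    if key is not None and not env.get(key):
        return key
    return None
```

A check like this can run before the first request so that a missing key surfaces as a clear message instead of a provider error.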

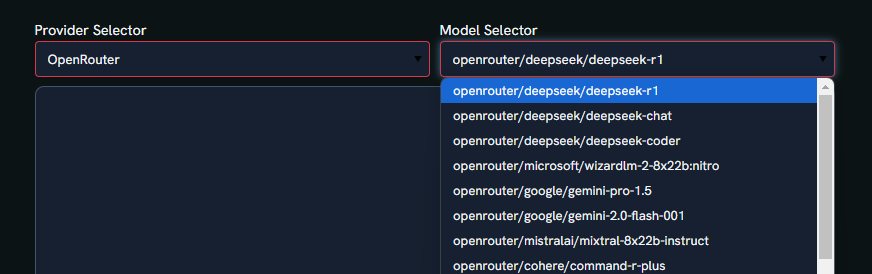

OpenRouter

If you wish to try out many models with a single API key, we recommend you use OpenRouter, which is supported by LiteLLM.

Local

Alternatively, you can run local models (Ollama, vLLM, etc.), in which case you need to provide both the model name and the base_url. See more on that here.

Arguments:

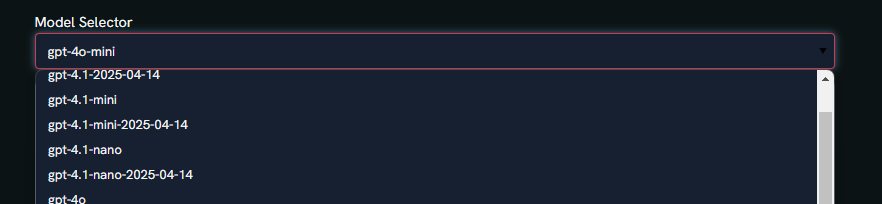

model_name: str = 'gpt-4o-mini'

The name of the model to use for the LLM.

model_args: dict = {}

Additional arguments to pass to the model.

base_url: str = None

The base URL for the model. Optional, to be used with custom endpoints.

output_mode: Literal['atomic', 'stream'] = 'stream'

Whether to return the message containing a streaming callback or an atomic one.

env_path: str = None

Path to the .env file to load. If not provided, the .env file in the current working directory will be used.
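To make the arguments concrete, here is a minimal sketch of a configuration object that mirrors them and turns them into keyword arguments of the kind `litellm.completion` accepts (`model`, `messages`, `stream`, `api_base`). The class `LLMChatConfig` and its method are hypothetical stand-ins, and the mapping from output_mode to `stream` is an assumption about how the element might use LiteLLM, not its confirmed implementation.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Literal, Optional


@dataclass
class LLMChatConfig:
    """Hypothetical stand-in that mirrors the documented arguments."""

    model_name: str = "gpt-4o-mini"
    model_args: Dict[str, Any] = field(default_factory=dict)
    base_url: Optional[str] = None
    output_mode: Literal["atomic", "stream"] = "stream"
    env_path: Optional[str] = None

    def __post_init__(self) -> None:
        if self.output_mode not in ("atomic", "stream"):
            raise ValueError(f"unsupported output_mode: {self.output_mode!r}")

    def completion_kwargs(self, messages: List[Dict[str, str]]) -> Dict[str, Any]:
        """Build keyword arguments in the shape litellm.completion accepts."""
        kwargs: Dict[str, Any] = {
            "model": self.model_name,
            "messages": messages,
            # Assumption: 'stream' mode maps to a streaming completion request.
            "stream": self.output_mode == "stream",
            # model_args are merged last in this sketch.
            **self.model_args,
        }
        if self.base_url is not None:
            kwargs["api_base"] = self.base_url
        return kwargs


# Example: a local Ollama model on its default port, returning atomic responses.
local = LLMChatConfig(
    model_name="ollama/llama3",
    base_url="http://localhost:11434",
    output_mode="atomic",
)
```

With LiteLLM installed, the resulting dictionary could be passed as `litellm.completion(**local.completion_kwargs(messages))`.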

Input Ports

| Port Name | Payload Type | Behavior |
|---|---|---|
| messages_emit_input | Union[MessagePayload, List[Union[MessagePayload, ToolsResponsePayload]]] | Processes incoming messages or lists of messages/tool responses to generate an LLM response, which is emitted from the message_output port. |
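Because the input port accepts either a single message or a list, a handler typically normalizes the payload to a list first. The sketch below illustrates that step only; the two dataclasses are simplified, hypothetical stand-ins whose fields are assumptions and do not reflect the real MessagePayload or ToolsResponsePayload definitions.

```python
from dataclasses import dataclass
from typing import List, Union


@dataclass
class MessagePayload:  # hypothetical stand-in, not the real class
    role: str
    content: str


@dataclass
class ToolsResponsePayload:  # hypothetical stand-in, not the real class
    content: str


HistoryItem = Union[MessagePayload, ToolsResponsePayload]
Incoming = Union[MessagePayload, List[HistoryItem]]


def as_history(payload: Incoming) -> List[HistoryItem]:
    """Wrap a single message in a list; copy a list so the caller's is not mutated."""
    if isinstance(payload, MessagePayload):
        return [payload]
    return list(payload)
```

Normalizing once at the port boundary keeps the rest of the handler free of type checks.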

Output Ports

| Port Name | Payload Type | Behavior |
|---|---|---|
| message_output | MessagePayload | Emits a MessagePayload containing the LLM's response to the next element. |

Views

(See here for styling options)